- cross-posted to:

- technology@lemmy.ml

- technology@lemmy.world

Walmart, Delta, Chevron and Starbucks are using AI to monitor employee messages::Aware uses AI to analyze companies’ employee messages across Slack, Microsoft Teams, Zoom and other communications services.

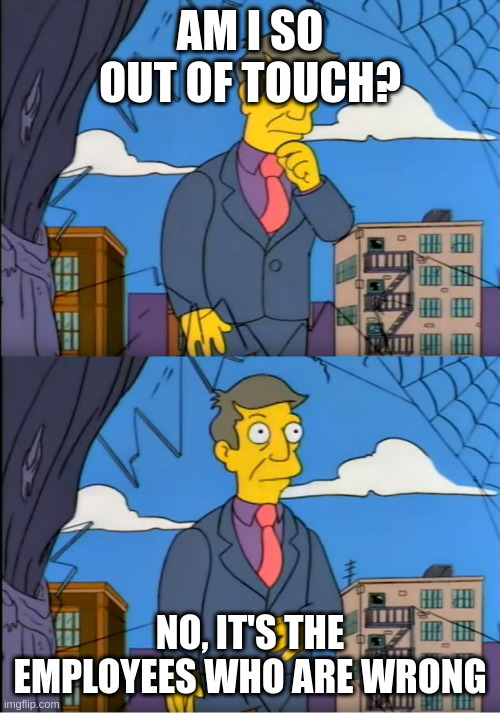

There’s going to be an article one of these days in Business Insider or something saying “employees increasingly establishing secret outside-of-the-company communication channels and sharing trade secrets over them.” And then the companies are going to get all pissy about “muh trade secritssssss” and issue nagging emails to the whole company not to set up Discords to evade their employee monitoring solution that they pay a gorillion dollars a year for. And because it was the CEO’s idea, he can’t just back down and admit it was wrong. He has to keep doubling down.

That’s a really interesting point. By forcing more surveillance of casual chat, they’re also risking confidential information being discussed on outside channels.

I would assume that for any reasonably large business, with a competent legal team, there is a certain amount of this considered to be an acceptable risk.

Employees discussing shit on your systems? You’re (most likely) legally responsible for it if things come to a court room. Employees discussing shit through their own side channels? You’ve got plausible deniability of awareness and a strong legal argument for it being outside of your responsibility due to having no control of it.

This is literally a strategy for some shady and unscrupulous companies to attempt to avoid liability. Conduct any questionable communication over official “unofficial” third party channels.

employees increasingly establishing secret outside-of-the-company communication

Like they’ve not made a WhatsApp group already lmao

And then come the attempts at criminalization

It’s in the business’s best interest that any questionable discussions happen outside of their systems.

The simultaneous chances of data exfiltration are a considered risk, and there are already legal actions available to the business for that aspect of it. This is effectively a solved problem.

This is already a thing. I’m part of a 25k person Discord server for Amazon/AWS employees both current and former. We often discussed a ton about the company’s inner workings, navigating the toxic AF environment, and helping people find other jobs. Nothing ever trade secret level, but that Discord would give any competitor a massive leg up in direct competition with Amazon.

former blue badge myself (port99, kitty corner from blackfoot), any idea if or how I could get in? not that I’m exactly burning with desire for it, but could be neat to see how things have changed since I left right when covid hit

I don’t think so because it requires you to provide proof you work there actively, and those who leave are assigned alumni and grandfathered in. It’s mainly just lots of PIP and toxicity that is discussed, and memeing about how dog shit things are.

word. not missing much then, haha

A lot of retailers are replacing their standard phone systems with products from Zoom and other AI transcription enabled providers. In environments with audio recording, it’s reasonable to assume that relatively soon, full transcripts of conversations identified to individual speakers will be easily obtained, summarized and analyzed by AI. This will hopefully soon come under scrutiny for violating both two- and one-party consent laws for audio recording.

And these companies will pay a small fine and continue doing it anyway

In a two-party-consent state, maybe.

But in a one-party-consent state, all that ~~Personnel~~ ~~Human Resources~~ People Ops will do is point to a clause in your onboarding paperwork where you agreed to be recorded while on company property using company telephony equipment as a condition of your employment.

As long as that’s in the employee paperwork it protects companies from some liability, but what about customer-employee or customer-customer conversations picked up by the system? I suppose a sign stating that video and audio conversations are being recorded would further lessen employer liability, but I imagine in the future laws about AI technology used on those convos will be put in place and the use of surreptitious AI conversation analytics in retail environments will be more regulated. For now though it sure seems like a free for all and I wouldn’t be surprised at unethical use becoming somewhat common.

Wouldn’t that be legal even in a two party consent state?

A two-party-consent state requires both people to consent to being recorded. So even if you (tacitly) agree to being recorded as a condition of your employment, if the person on the other end of the conversation doesn’t then it’s an illegal recording. That’s why it probably wouldn’t be an issue in one of those states.

But in a one party state, the other person on the recording doesn’t have to consent as long as you do. So as long as you know your conversation is being recorded (again, hypothetically as a condition of employment) the other party (probably) doesn’t have any recourse should your employer use a third-party to monitor it.

Presumably this is monitoring conversations between employees, all of whom would have it in their contract (I’m thinking about stuff like break rooms). In fact, even in customer facing areas, corporations would just hang a sign that says ‘for your safety we have recording equipment in the store’ or whatever.

The article specifically called out “workplace communication platforms” so my comments were more directed at companies monitoring their instances of Zoom/Slack/Teams/etc. But it’s a certain bet that Walmart will try to use AI to monitor its breakrooms to make sure nobody says the evil “U” word.

It’s already been occurring for a while now without the summary and analysis by AI.

Automatic audio transcription has been kicking around for a long time now, at various levels of accuracy. The only important distinction of the speaking party is which side of the call (employee or customer) it’s coming from, and that doesn’t require any complicated analysis, just that your recordings capture incoming and outgoing audio on separate channels (which is how phone calls work in the first place).
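To make the separate-channels point concrete, here is a minimal sketch, assuming the recording is 16-bit little-endian stereo PCM with one side of the call on each channel. Which channel carries the employee and which the customer is an assumption for illustration, as are the function names; real call-recording systems vary.

```python
import struct


def split_stereo_pcm16(frames: bytes) -> tuple[list[int], list[int]]:
    """De-interleave 16-bit little-endian stereo PCM into (left, right) samples."""
    if len(frames) % 4 != 0:
        raise ValueError("expected whole stereo frames of 4 bytes each")
    count = len(frames) // 2  # total number of 16-bit samples across both channels
    samples = struct.unpack(f"<{count}h", frames)
    # Stereo PCM interleaves samples as L, R, L, R, ...
    return list(samples[0::2]), list(samples[1::2])


def label_speakers(frames: bytes,
                   left_label: str = "employee",
                   right_label: str = "customer") -> dict[str, list[int]]:
    """Attach a speaker label to each channel (the mapping is hypothetical)."""
    left, right = split_stereo_pcm16(frames)
    return {left_label: left, right_label: right}
```

Each labeled channel could then be fed to a transcription step on its own, so no speaker identification is needed to know who said what.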

As far as consent goes, any time you hear “this call may be monitored for training purposes” and stay on the line, as a customer you have consented to the recording. As an employee they usually just include it in your contract or one of the many things they get every employee to sign. Only really matters from a business standpoint if someone is willing to take you to court over it.

Most call center software/systems have these options built in at this point.

You only communicate over company channels what you don’t mind appearing in the court room. That was already the case, now it’s just more so.

There seems to be a shocking number of people who expect some amount of privacy on corporate owned systems, property, or hardware.

As someone in tech: as much as I don’t have a singular speck of interest in spying on what you’re doing, intensive monitoring of every single thing happening within the company systems is important and useful. Often these logs are vitally necessary for things like malware detection and remediation, data exfiltration detection and investigation, investigation into system and network issues, and legal investigations or action (both for us, and when subpoenaed).

The amount of data we log, and I have access to, on employees’ actions on our systems is disturbing. But I would be lying if I said that I haven’t encountered a legitimate need for a shocking amount of it.

That and the company owns the system. I don’t think it’s unethical for them to monitor everything. The system is a tool that they let you use to facilitate your work. Nothing more.

Yup, company reminds me every time I log into their computer that it’s not for my personal use. Only personal stuff that gets done on it is checking my 401k contributions every so often

If you own it, don’t install a damn thing your employer demands. If they want security access on a device, they pay for it.

If you don’t own it, don’t use it for a damn thing that isn’t work-related and use it minimally for that. “Yes sir” emails and submitting reports. That’s it. Don’t do research, don’t surf the web, don’t accept a single personal call, email, or text. They don’t have any right to know anything that is not work related.

deleted by creator

Edit: the deleted comment was talking about how this has legal compliance implications, effectively that companies have to do this.

Thank you for highlighting this, I was struggling myself on how to word a message like this.

I work tech in a heavily regulated type of business, and have been neck deep in work on things with legal compliance considerations lately.

The issue with your “corporate snap chat” idea is that it would inevitably be used to share information relevant to potential legal proceedings.

Any space like that needs to be out of the business’s control and view to provide a legal air gap for any responsibility. Businesses would prefer their employees do that shit where they can safely argue no control or responsibility over it.