Sorry, mixed up the videos. It’s actually this one, from 2014:

https://www.destroyallsoftware.com/talks/the-birth-and-death-of-javascript

Edited link above

Not sure how ollama integration works in general, but these are two good libraries for RAG:

That’s a great line of thought. Take an algorithm of “simulate a human brain”. Obviously that would break the paper’s argument, so you’d have to find why it doesn’t apply here to take the paper’s claims at face value.

There’s a number of major flaws with it:

IMO there’s also flaws in the argument itself, but those are more relevant

This is a silly argument:

[…] But even if we give the AGI-engineer every advantage, every benefit of the doubt, there is no conceivable method of achieving what big tech companies promise.’

That’s because cognition, or the ability to observe, learn and gain new insight, is incredibly hard to replicate through AI on the scale that it occurs in the human brain. ‘If you have a conversation with someone, you might recall something you said fifteen minutes before. Or a year before. Or that someone else explained to you half your life ago. Any such knowledge might be crucial to advancing the conversation you’re having. People do that seamlessly’, explains van Rooij.

‘There will never be enough computing power to create AGI using machine learning that can do the same, because we’d run out of natural resources long before we’d even get close,’ Olivia Guest adds.

That’s as shortsighted as the “I think there is a world market for maybe five computers” quote, or the worry that NYC would be buried under mountains of horse poop before cars were invented. Maybe transformers aren’t the path to AGI, but there’s no reason to think we can’t achieve it in general unless you’re religious.

EDIT: From the paper:

The remainder of this paper will be an argument in ‘two acts’. In ACT 1: Releasing the Grip, we present a formalisation of the currently dominant approach to AI-as-engineering that claims that AGI is both inevitable and around the corner. We do this by introducing a thought experiment in which a fictive AI engineer, Dr. Ingenia, tries to construct an AGI under ideal conditions. For instance, Dr. Ingenia has perfect data, sampled from the true distribution, and they also have access to any conceivable ML method—including presently popular ‘deep learning’ based on artificial neural networks (ANNs) and any possible future methods—to train an algorithm (“an AI”). We then present a formal proof that the problem that Dr. Ingenia sets out to solve is intractable (formally, NP-hard; i.e. possible in principle but provably infeasible; see Section “Ingenia Theorem”). We also unpack how and why our proof is reconcilable with the apparent success of AI-as-engineering and show that the approach is a theoretical dead-end for cognitive science. In “ACT 2: Reclaiming the AI Vertex”, we explain how the original enthusiasm for using computers to understand the mind reflected many genuine benefits of AI for cognitive science, but also a fatal mistake. We conclude with ways in which ‘AI’ can be reclaimed for theory-building in cognitive science without falling into historical and present-day traps.

That’s a silly argument. It sets up a strawman and knocks it down. Just because you create a model and prove something in it, doesn’t mean it has any relationship to the real world.

Canonical lives and dies by the BDFL model. It allowed them to do some great work early on in popularizing Linux with lots of polish. Canonical still does good work when external pressure forces it to, like contributing upstream. The model falters when they have their own sandbox to play in, because the BDFL model means that any internal feedback like “actually this kind of sucks” just gets brushed aside. It doesn’t help that the BDFL in this case is the CEO, founder, and funder of the company and is paying everyone working there. People generally don’t like to risk their job to say the emperor has no clothes. It’s easier to just shrug your shoulders and let the internet do that for you.

Here are good examples of when the internal feedback failed and the whole internet had to chime in and say that the hiring process did indeed suck:

https://news.ycombinator.com/item?id=31426558

https://news.ycombinator.com/item?id=37059857

“markshuttle” in those threads is the owner/founder/CEO.

I’d be careful of pushing the narrative about computers not being a good choice for regular users. I’m going to channel a bit of Stallman and say that that’s how we end up without The Right To Read

For your bullet points:

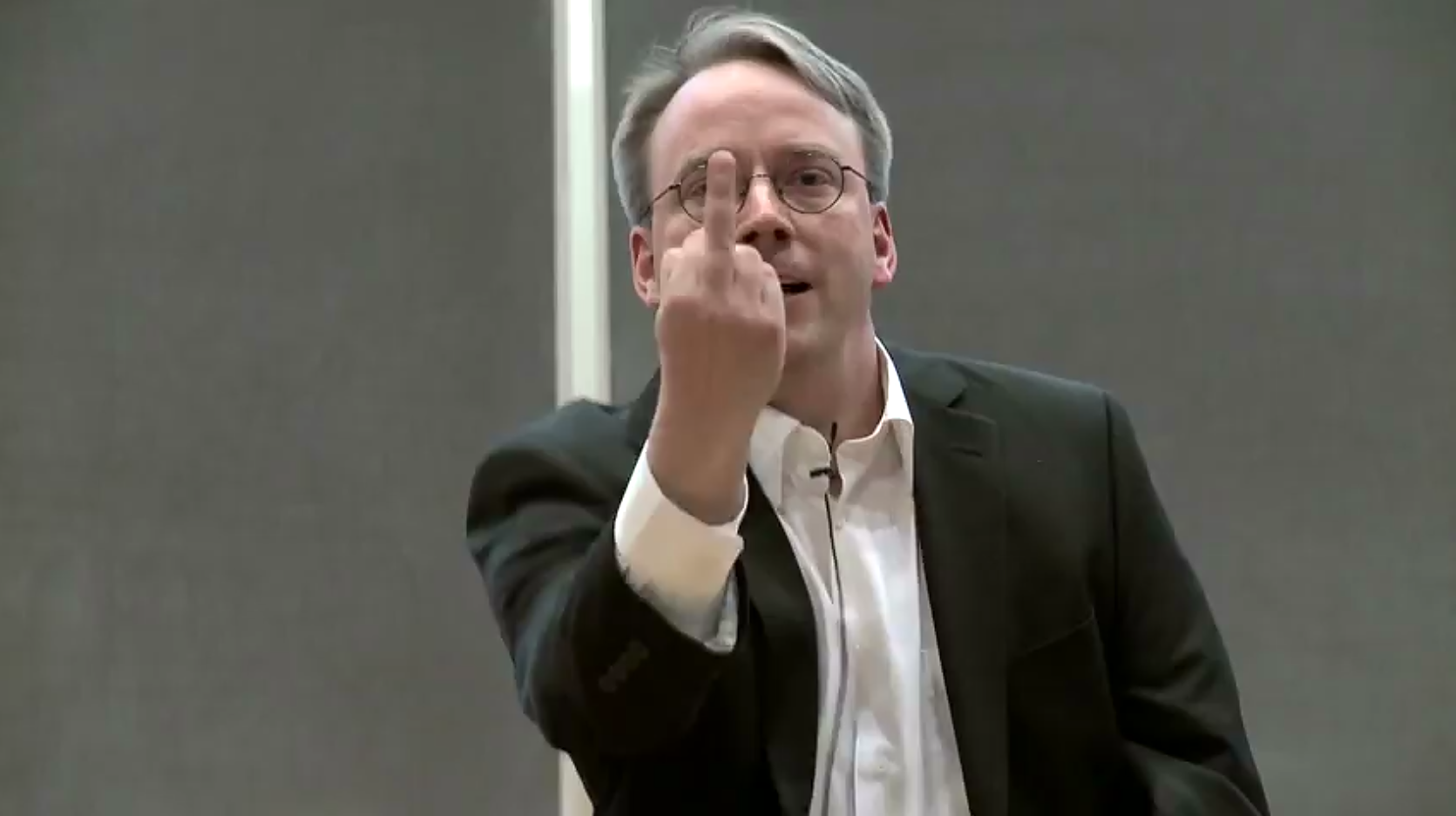

GPU issues can be hard, but that’s not really Linux’s fault. There’s a reason this image exists of Linus giving nvidia the middle finger:

That being said, it’s getting better. As of this year, nvidia has started putting some real effort into making things work with wayland.

EDIT: I’ve found nirvana with NixOS, speaking of GPU drivers. I just add a few lines to /etc/nixos/configuration.nix and it goes off and ensures that the nvidia drivers are present. I also run lots of CUDA stuff on top of that and it all works about as seamlessly as possible.

IMO Hacker News handles this better. Threads/comments are rarely deleted, they’re mostly minimized and you have to log in to expand them

Unfortunately there isn’t one easy source that I’ve found. This is based on reading the stuff you linked to, as well as discourse/matrix discussions linked to from those sources. I compare it mentally to Guido van Rossum as BDFL of Python (though not any longer). He did a much better job of communicating expectations, like here

It made some people unhappy that there was no Python 2.8, but everybody knew what was happening. The core Python team also wasn’t surprised by that announcement, unlike with stuff like Anduril or flakes for the nix devs.

There was also a failure to communicate with stuff like the PR that would switch to Meson. The PR author should have known if Eelco broadly agreed with it before opening it. If there was a process that the PR author just ignored, the PR should have been closed with “Follow this process and try again”. That process can be as simple as “See if Eelco likes it”, since he was BDFL, but the process needs to be very clear to everyone.

Exactly, thanks. “politicking” != US political issues

My take on it is that the creator of Nix was very good technically but was not a good BDFL, and that was the root of the problem. He didn’t do a good job of politicking, stepped down, and now Nix is going through a bit of an interregnum. I don’t think it’s likely to fail overall though, because nixpkgs is too valuable of a resource to just get abandoned. I expect the board seats will be filled by people that know how to politick, and things will continue on after that.

The lesson learned is that being a BDFL is hard. IMO Eelco Dolstra failed because he had opinions about things like Anduril sponsorship and flakes, and didn’t just declare “This is the way things are going to be, take it or leave it”. People got really pissed off because there wasn’t a clear message or transparency, which resulted in lots of guessing.

They won’t open source snaps because they want to control the snap ecosystem to make money off of it for an IPO

That’s an interesting comment from a guy that used to work for Canonical, and then went anti-snap pretty hard, to the point that he made this:

I’ve noticed that as well. It silently fixed the typo when I asked it to update its previous response to fix something else.

Thanks for the list. It’d be interesting to see something like the Are We X Yet sites for Mozilla/Rust projects that tracks this sort of thing

Not sure if this is my app or a Lemmy setting, but I only see it once, because it’s properly cross-posted

Relevant HN thread that was just posted:

https://news.ycombinator.com/item?id=39360724

You’re not the only one noticing it

Does anyone here actually use awk for more than trivial operations? If I ever have to consider writing anything substantial with bash/awk/sed/etc, I just start writing a Python script. No hate to the classic tools, but Python is just really nice.
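
To make that concrete, here’s a rough sketch of the kind of job that usually starts as an awk one-liner: summing one column grouped by another. The function name `sum_by_key` and the whitespace-separated input format are just assumptions for illustration, not anything from a real project.

```python
from collections import defaultdict


def sum_by_key(lines, key_col=0, value_col=1):
    """Sum a numeric column grouped by a key column.

    Roughly what this awk program does:
        awk '{ t[$1] += $2 } END { for (k in t) print k, t[k] }'
    """
    totals = defaultdict(float)
    for line in lines:
        fields = line.split()
        # Skip blank lines and lines that are missing a column.
        if len(fields) <= max(key_col, value_col):
            continue
        # Parse the value first so a bad line never creates a key.
        try:
            value = float(fields[value_col])
        except ValueError:
            continue
        totals[fields[key_col]] += value
    return dict(totals)


if __name__ == "__main__":
    import sys

    # Usage (hypothetical file name): python sum_by_key.py < data.txt
    for key, total in sorted(sum_by_key(sys.stdin).items()):
        print(key, total)
```

It’s longer than the awk version, but once the skipping and error handling are spelled out like this it’s easy to extend and test.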